Introduction

The smile is a common expression of emotion in social interaction (Niedenthal, Brauer, Halberstadt, and Innes-Ker 853-864). A smile can be genuine, that is, associated with joy in the emotional state of the person smiling. On other occasions, however, a smile can be deceptive and false: people deliberately fake smiles when joyful emotions are absent in order to deceive others (Chartrand and Gosselin 179-189). Automated EEG systems have since taken on a new role in the detection of smiles. EEG equipment records and displays critical signals and features in a user-friendly manner. Quantitative analysis of the digitized signals makes it possible to measure the temporal features of the recorded signals, and to filter and analyze the resulting components before the final interpretation. Quantitative analysis can also reduce the time needed to review EEG recordings and allows a smile to be detected effectively and accurately through simple metrics. Such accuracy helps avoid confusing different kinds of smiles, allowing a smile to be identified more accurately and fake and authentic smiles to be clearly differentiated (Anderson and Wisneski 166-191).

Spontaneous Smiles

Spontaneous smiles have long been regarded as expressions of positive emotions such as pleasure, happiness, and enjoyment. This view follows the theory of discrete emotions, which holds that there is an intrinsic link between felt emotions and the facial expressions that display them (Hao, Fujita, Kohira, Fuchigami, Okubo, and Harada 68-73). Spontaneous smiles express felt emotion, whereas false smiles are marketing, social, and polite gestures meant to convince others that there is enjoyment when there is none. Most people can activate the zygomaticus muscles voluntarily, but spontaneous smiles arise when these muscles are activated naturally together with movement of the muscles of the upper face. An authentic smile and a fake smile resemble each other, though the fake smile is more asymmetric than the authentic one. An authentic smile also makes more use of the cheek raiser than a fake smile. Adults are often aware of these differences and can perceive them (Ahn, Bailenson, Fox, and Jabon 1).

However, the ability to recognize spontaneous and fake smiles differs with age. As Murphy, Lehrfeld, and Isaacowitz (811-821) show, older adults have an advantage over younger adults in recognizing different types of smiles. Recognizing spontaneous smiles depends on the ability to recognize and distinguish genuinely positive emotional experiences (Okubo, Kobayashi and Ishikawa 217-225), and this ability enhances effective interpersonal relations. Judging whether a smile is fake or authentic, however, goes beyond observing the smile and its facial cues and requires more technical means of detection (Chartrand and Gosselin 179-189).

EEG and EMG smile detection

Smile detection depends on people's mentalizing capacity, that is, their ability to recognize the desires, emotions, and beliefs of others. It depends partly on correctly anticipating and predicting the facial dynamics through which emotions are expressed, based on experience and beliefs. EEG recordings of brain activity provide information about the underlying neural processes (Shoeb, Pang, Guttag and Schachter 157-172). Accurately detecting the feelings of others helps people develop anticipatory reactions to them, and the brain's neural system is central to mentalizing. The ability to judge a smile depends on an individual's capacity to perceive the relevant cues (Chartrand and Gosselin 179-189). Detection is not limited to facial cues, although these cues are necessary to initiate the assessment of a smile. Repeated exposure to meaningful stimuli creates elaborate mental associations connected to those stimuli and intensifies the original attitudes toward them, compared with minimal exposure. During moderate exposure to a stimulus, people can scrutinize it and draw inferences, ideas, and images that consolidate their attitudes toward it.

EEG smile detection was developed to provide a system that detects smiles reliably (Messinger, Cassel, Acosta, Ambadar and Cohn 133-155). Real and fake smiles both occur, but the limits of observation prevent them from being told apart correctly. An automated system, by contrast, detects smiles automatically and produces accurate information. The automated EEG smile detector advances smile recognition through its ability to detect spontaneous smiles successfully. It provides new information about previously little-known smile behaviors, helping to detect spontaneous and fake smiles with reasonable accuracy. Future research should focus more deeply on the effects of emotional stimuli on smile detection rather than relying only on self-reports. The datasets used in this study were small and lacked the imaging variability needed for comprehensive smile recognition; such images require variation in age, ethnicity, facial hair, gender, and glasses. In particular, imaging databases for detecting facial expressions and smiles lack ethnic diversity. Research shows that smile detection applications detect smiles less reliably on dark-skinned people than on light-skinned people. Such biases are rarely documented in research on automated smile detection, which leaves a gap in the field (Witvliet and Vrana 3-25).

Methodology

The study recorded participants under three experimental trials. We used a two-stage machine detection approach. In the first stage, the automated machine detected smiles; the recorded data were then passed to a second machine, which analyzed the smiles and classified them as fake or spontaneous. The study of automated EEG smile detection involved 3 trials and 9 subjects, all of whom volunteered. To study spontaneous and evoked smiles, 4 participants were evaluated in real, natural situations through exposure to joyous moments, while the others were asked to pose a smile.

Import dataset

For this study, we used a dataset of the faces of nine participants, recorded under conditions closely resembling the operating conditions of the target application, together with an automated smile detector. The dataset spans a range of facial expressions in different settings. Images containing prototypical smiling faces were manually coded as happy, unhappy, or unclear. After coding, only the faces marked happy or unhappy were used, and those labeled unclear were discarded. The images were then registered by rotating and cropping them so that each face was scaled on the eye areas, which improves the accuracy of automatic smile detection (Messinger, Mahoor, Chow, and Cohn 285-305).
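The label filtering and eye-based registration described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the study's code: the record format (a dict with a `label` key), the helper names `keep_labeled` and `eye_alignment`, and the 64-pixel target eye distance are all hypothetical. Given the pixel coordinates of both eye centers, the angle of the line joining them gives the rotation that levels the eyes, and the ratio of a target inter-ocular distance to the measured one gives the scale factor used for cropping.

```python
import math

# Labels kept for training; images coded "unclear" are discarded.
KEEP_LABELS = {"happy", "unhappy"}


def keep_labeled(samples):
    """Return only samples coded 'happy' or 'unhappy' (hypothetical record format)."""
    return [s for s in samples if s["label"] in KEEP_LABELS]


def eye_alignment(left_eye, right_eye, target_eye_distance=64.0):
    """Return (angle_deg, scale) for registering a face on its eye centers.

    Rotating by -angle_deg levels the eyes (the sign convention depends on the
    image library's y axis); multiplying by scale sets the inter-ocular distance
    to target_eye_distance pixels (an assumed value).
    """
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    angle_deg = math.degrees(math.atan2(dy, dx))
    scale = target_eye_distance / math.hypot(dx, dy)
    return angle_deg, scale


samples = [
    {"id": 1, "label": "happy"},
    {"id": 2, "label": "unclear"},
    {"id": 3, "label": "unhappy"},
]
print([s["id"] for s in keep_labeled(samples)])  # [1, 3]
print(eye_alignment((100, 120), (164, 120)))     # (0.0, 1.0)
```

Computing the rotation from the eyes alone keeps the registration independent of mouth shape, which is the region the smile detector must later judge.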

Extract Epochs

The detector recorded participants' reactions at different score levels and timings for the datasets. Epochs were extracted, and baseline data for eye observation were used first to compare real smiles against baseline; the same analysis was then run for evoked smiles against baseline, and the baseline segments were later removed to filter the data effectively. This allowed evoked smiles to be evaluated against real smiles.
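Epoch extraction cuts a fixed window around each event marker. The sketch below assumes a single channel stored as a plain list of samples and event positions given as sample indices; the function name `extract_epochs` and the window lengths in the example are illustrative, since the source does not report them. Events whose window would run past either end of the recording are skipped rather than padded.

```python
def extract_epochs(signal, event_samples, pre, post):
    """Cut the window [event - pre, event + post) around each event.

    signal        -- list of samples for one channel
    event_samples -- sample indices of the event markers (e.g. smile onsets)
    pre, post     -- number of samples kept before and after each event
    """
    epochs = []
    for event in event_samples:
        start, stop = event - pre, event + post
        if start < 0 or stop > len(signal):
            continue  # window would run off the recording; skip this event
        epochs.append(signal[start:stop])
    return epochs


signal = list(range(20))
print(extract_epochs(signal, [5, 1, 18], pre=2, post=3))  # [[3, 4, 5, 6, 7]]
```

Skipping edge events keeps every epoch the same length, which the later averaging step relies on.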

Reference channels to A1

After the epoch step, the recorded data were re-referenced to channel A1 for the study of spontaneous smiles. Electromyographic (EMG) activity was recorded at the zygomatic sites, amplified, and then rectified and integrated to obtain the signal.
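Re-referencing to A1 (the left earlobe electrode in the 10-20 system) amounts to subtracting the A1 signal from every other channel sample by sample, and rectifying and integrating EMG amounts to taking the absolute value of each sample and summing it over time. The sketch below shows both under simple assumptions: channels are held in a dict of equal-length lists, the sampling rate `fs` is known, and the integral uses the rectangle rule. The function names `rereference` and `rectify_and_integrate` are hypothetical.

```python
def rereference(channels, ref_name="A1"):
    """Return every channel except the reference, with the reference subtracted."""
    ref = channels[ref_name]
    return {
        name: [x - r for x, r in zip(data, ref)]
        for name, data in channels.items()
        if name != ref_name
    }


def rectify_and_integrate(emg, fs):
    """Full-wave rectify an EMG trace and integrate it over time (rectangle rule).

    emg -- samples from the zygomatic site (after amplification)
    fs  -- sampling rate in Hz
    """
    rectified = [abs(x) for x in emg]
    return sum(rectified) / fs


channels = {"C3": [5.0, 6.0], "C4": [3.0, 4.0], "A1": [1.0, 1.0]}
print(rereference(channels))                        # {'C3': [4.0, 5.0], 'C4': [2.0, 3.0]}
print(rectify_and_integrate([1, -1, 2, -2], fs=2))  # 3.0
```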

Remove baseline

The automated detector was used to collect arousal ratings and zygomatic data from the participants. Stimuli were presented binaurally through headphones. The amplitudes of the smiles were recorded over different time spans using automated EEG equipment.
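Baseline removal, the step named in this heading, commonly subtracts from each epoch the mean of its pre-stimulus samples so that every epoch starts near zero. The sketch below assumes the first `n_baseline` samples of each epoch form the pre-stimulus interval; that length is not given in the source, and the function name `remove_baseline` is hypothetical.

```python
def remove_baseline(epoch, n_baseline):
    """Subtract the mean of the first n_baseline (pre-stimulus) samples."""
    if not 0 < n_baseline <= len(epoch):
        raise ValueError("n_baseline must be between 1 and len(epoch)")
    baseline = sum(epoch[:n_baseline]) / n_baseline
    return [x - baseline for x in epoch]


print(remove_baseline([2.0, 4.0, 10.0, 12.0], n_baseline=2))  # [-1.0, 1.0, 7.0, 9.0]
```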

Filter data

The epochs surrounding the voluntary movements were then averaged, and the resulting ERP waveform showed a characteristic slow negative shift that increased gradually before the movement was registered. This effect originates in the central-parietal region of the brain.
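Averaging time-locked epochs keeps activity that is consistent across trials, such as the slow negative shift before movement, while activity that is not time-locked to the event tends to cancel out. The sketch below pairs that averaging with a simple moving-average (boxcar) low-pass filter as one possible reading of the "filter data" step; the filter type and width are assumptions, not parameters reported in the source, and the names `moving_average` and `average_epochs` are hypothetical.

```python
def moving_average(signal, width):
    """Boxcar low-pass filter; returns only fully overlapping windows."""
    return [sum(signal[i:i + width]) / width for i in range(len(signal) - width + 1)]


def average_epochs(epochs):
    """Point-by-point mean across equally long, time-locked epochs (the ERP)."""
    length = len(epochs[0])
    if any(len(e) != length for e in epochs):
        raise ValueError("all epochs must have the same length")
    return [sum(e[i] for e in epochs) / len(epochs) for i in range(length)]


epochs = [[1.0, 2.0, 3.0, 4.0], [3.0, 4.0, 5.0, 6.0]]
erp = average_epochs(epochs)
print(erp)                     # [2.0, 3.0, 4.0, 5.0]
print(moving_average(erp, 2))  # [2.5, 3.5, 4.5]
```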

Ocular Correction using EOG

Many processes run in the brain at the same time, and they constrain the EEG signal that is generated. To eliminate activity irrelevant to the study of smiles, other physiological processes were removed so that only the components of interest remained. The experiment was repeated to obtain the EEG segments surrounding the events (epochs). These epochs were then aligned and averaged, under the assumption that they were time-locked to the respective smile events.
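The text does not say how ocular activity was removed. One widely used option is regression: estimate how strongly the EOG channel propagates into an EEG channel, then subtract the scaled EOG signal. The sketch below implements a single-channel least-squares version; it is a swapped-in illustration, not necessarily the method used in the study, and the function name `eog_regression_correct` is hypothetical.

```python
def eog_regression_correct(eeg, eog):
    """Remove the part of an EEG channel that is linearly explained by the EOG.

    Returns (corrected_eeg, b), where b is the least-squares propagation factor.
    """
    n = len(eeg)
    mean_eeg = sum(eeg) / n
    mean_eog = sum(eog) / n
    cov = sum((x - mean_eeg) * (y - mean_eog) for x, y in zip(eeg, eog))
    var = sum((y - mean_eog) ** 2 for y in eog)
    if var == 0:
        return list(eeg), 0.0  # flat EOG: nothing to regress out
    b = cov / var
    return [x - b * y for x, y in zip(eeg, eog)], b


eog = [1.0, -1.0, 2.0, -2.0, 0.0]
brain = [0.5, 0.5, -0.5, -0.5, 0.0]  # toy "neural" signal, uncorrelated with eog
eeg = [s + 0.3 * y for s, y in zip(brain, eog)]
corrected, b = eog_regression_correct(eeg, eog)
print(round(b, 6))  # 0.3
```

Because the toy neural signal is uncorrelated with the EOG, the regression recovers the 0.3 propagation factor and the corrected channel matches the neural signal; with real data the two overlap partly, which is one reason ICA-based correction is often preferred.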

Reject epochs with abnormal values

The models used to study smile dynamics yielded estimates for the analyzed subjects, which were then used to evaluate the effectiveness of the automatic smile detection equipment (Bae 127).
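The rejection criterion is not reported in the source. A simple and common choice is an absolute amplitude threshold: any epoch containing a sample beyond, for example, ±100 µV is dropped as a likely artifact. The threshold below is an assumed value and the function name `reject_epochs` is hypothetical; the indices of rejected epochs are returned so that the rejection can be reported.

```python
def reject_epochs(epochs, threshold_uv=100.0):
    """Split epochs by absolute amplitude; threshold in microvolts (assumed value)."""
    kept, rejected = [], []
    for index, epoch in enumerate(epochs):
        if max(abs(x) for x in epoch) > threshold_uv:
            rejected.append(index)  # contains an abnormal value
        else:
            kept.append(epoch)
    return kept, rejected


epochs = [[10.0, -20.0], [150.0, 0.0], [-99.0, 99.0]]
kept, rejected = reject_epochs(epochs)
print(len(kept), rejected)  # 2 [1]
```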

Run ICA

When ICA was run, the resulting ERPs differed across channels and time windows. The outputs showed clear variation in how participants expressed evoked and spontaneous smiles.

Plot ERPs

To average the ERP properly, we ensured that the relationship between the selected epochs and the events (smiles) was consistent. We used the Bereitschaftspotential (BP), a reproducible, robust, slow negativity that precedes brisk, self-paced movements such as a smile.

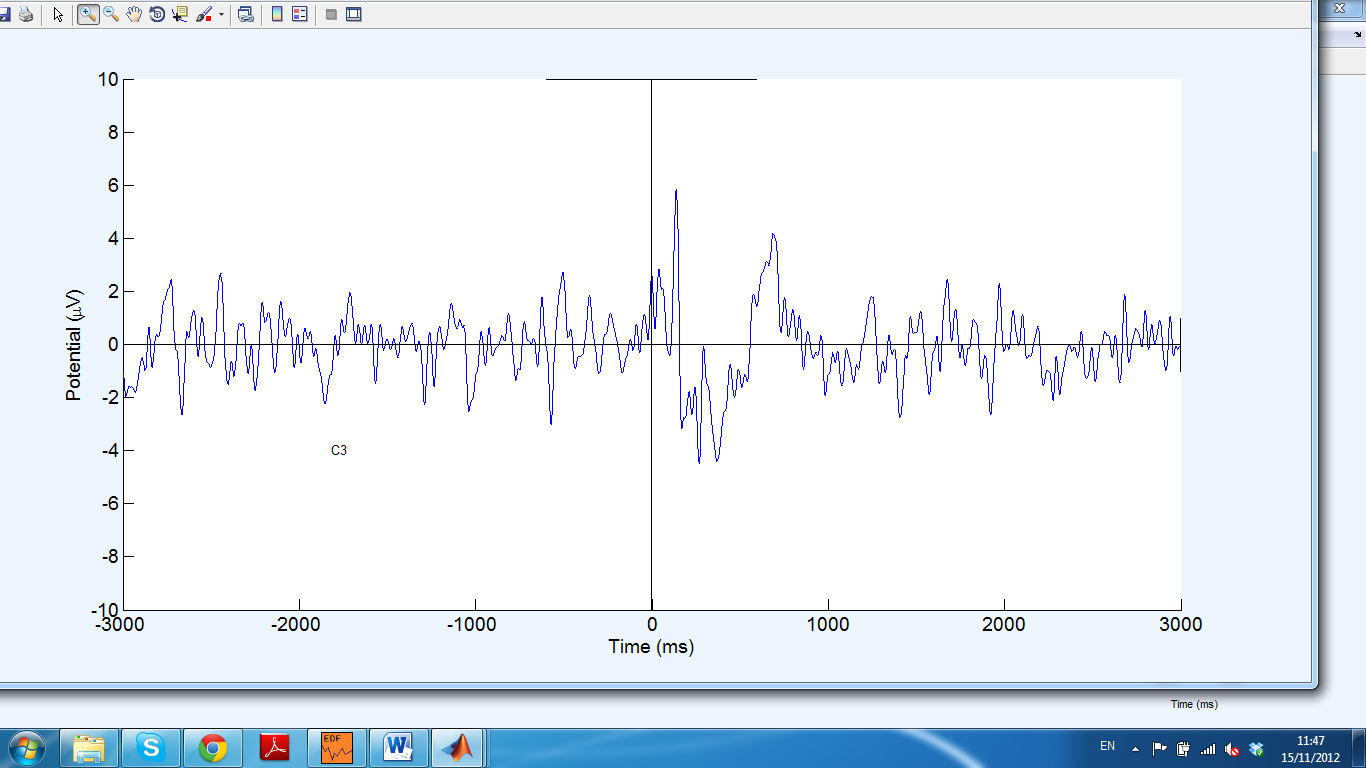

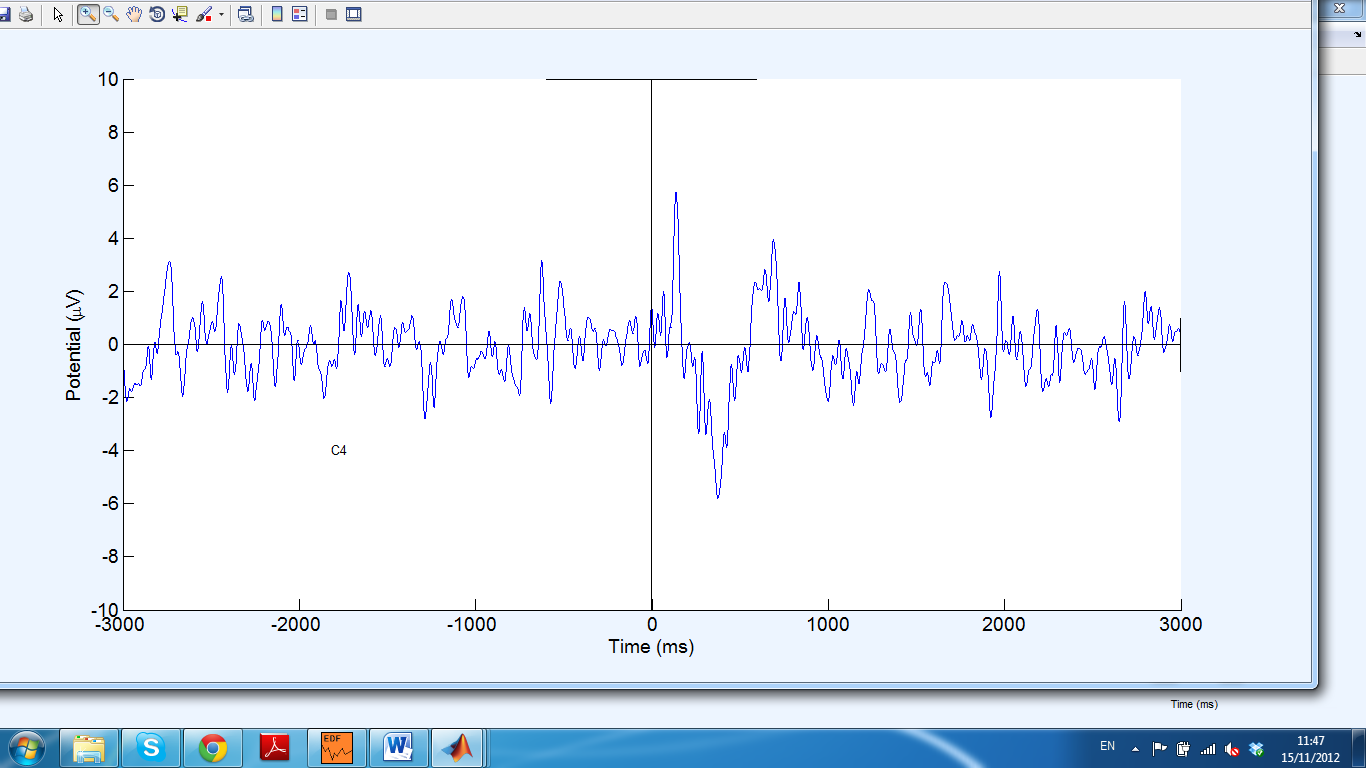

In channels C3 and C4, a negative peak is observed after the onset.

The differences between evoked and real smiles were examined, and the difference between channels C3 and C4 was computed.
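The negative peak after onset and the C3 minus C4 difference can both be computed directly from the averaged waveforms. The sketch assumes each ERP is a list of amplitudes with a matching list of times in seconds and onset at time 0; the names `negative_peak` and `difference_wave` and the example values are illustrative, not data from the study.

```python
def negative_peak(erp, times, onset=0.0):
    """Return (latency, amplitude) of the most negative sample at or after onset."""
    after_onset = [(amp, t) for amp, t in zip(erp, times) if t >= onset]
    if not after_onset:
        raise ValueError("no samples at or after onset")
    amplitude, latency = min(after_onset)  # smallest amplitude; earliest time on ties
    return latency, amplitude


def difference_wave(erp_a, erp_b):
    """Sample-by-sample difference, e.g. C3 minus C4."""
    return [a - b for a, b in zip(erp_a, erp_b)]


times = [-0.1, 0.0, 0.1, 0.2]
c3 = [-5.0, 0.0, -3.0, 1.0]
c4 = [-4.0, 0.5, -2.0, 0.5]
print(negative_peak(c3, times))  # (0.1, -3.0)
print(difference_wave(c3, c4))   # [-1.0, -0.5, -1.0, 0.5]
```

Restricting the search to samples at or after onset keeps pre-movement negativity, such as the BP, from being mistaken for the post-onset peak.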

Plot channels C3 and C4 time-frequency maps

Time-frequency maps of channels C3 and C4 were plotted to study the signal amplitude while subjects smiled. The amplitude increased whenever subjects smiled.
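A time-frequency map shows how power at each frequency changes over time. The sketch below builds a basic one by sliding a Hann-windowed segment along the signal and evaluating a single discrete Fourier transform term at each frequency of interest, which is a simple short-time Fourier transform. The window length, step, sampling rate, and frequencies are assumptions, the names `segment_power` and `time_frequency_map` are hypothetical, and wavelet-based maps are a common alternative.

```python
import cmath
import math


def segment_power(segment, fs, freq):
    """Power of one segment at `freq` Hz from a single Hann-windowed DFT term."""
    n = len(segment)
    if n < 2:
        raise ValueError("segment needs at least 2 samples")
    acc = 0j
    for k, x in enumerate(segment):
        w = 0.5 - 0.5 * math.cos(2 * math.pi * k / (n - 1))  # Hann taper
        acc += w * x * cmath.exp(-2j * math.pi * freq * k / fs)
    return abs(acc) ** 2 / n


def time_frequency_map(signal, fs, freqs, win, step):
    """Rows follow `freqs`; columns follow window start positions."""
    starts = range(0, len(signal) - win + 1, step)
    return [[segment_power(signal[s:s + win], fs, f) for s in starts] for f in freqs]


fs = 100  # Hz, assumed sampling rate
signal = [math.sin(2 * math.pi * 10 * t / fs) for t in range(200)]  # 10 Hz test tone
tf = time_frequency_map(signal, fs, freqs=[10, 25], win=50, step=25)
print(len(tf), len(tf[0]))  # 2 7
print(all(p10 > p25 for p10, p25 in zip(tf[0], tf[1])))  # True
```

Plotting `tf` as an image (frequency on one axis, window start on the other) gives the map; an increase in a band while subjects smile would appear as brighter columns during the smile windows.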

Channels C3 and C4 properties

Finally, the properties of C4 and C3 were recorded.

System Applications

Automated detection of various waveforms supports the study of different aspects of signal processing in clinical medicine, and a number of methods have been proposed for automated, neural-based signal analysis (Song 788-796). The ability of EEG to distinguish true from false electrical activity in the brain makes it a powerful tool in neurology, including for detecting different types of smiles and the possible causes of the observed results. It can even determine the electrical activity that produces smiles in cases of facial paralysis. This relies on recording EEG and inspecting the signals to assess conditions such as sleep disorders, epilepsy, and facial expressions (“Lay Abstracts” 228-232).

Involuntary jerking of the muscles is a condition known as myoclonus. Myoclonus can also take the form of a sudden cessation of muscle activity, and it produces different physiological results depending on the situation. EEG makes it possible to determine whether the cause of myoclonus is spinal, cortical, or subcortical.

Results

Participants liked positive stimuli more than negative stimuli, and this preference was reflected in several physiological variables. Positive stimuli produced more zygomatic muscle activity, which corresponds to smiling, and therefore higher EMG responses than negative stimuli. This is consistent with literature linking brow (corrugator) EMG to emotional valence, which influences smile detection. The research also found that working with facial expression datasets requires precise eye registration: inaccurate eye alignment led to smile detection errors during training on the datasets. The automatic smile detector uses various smile dynamics to estimate smile intensity more effectively than human estimates do. The comparison of real and faked smiles showed exaggeration in fake smiles, whereas human coding was slow and observers frequently experienced burnout.

Discussion

This research sought to elucidate the EEG system for automated smile detection by analyzing spontaneous and evoked smiles. Various stimuli evoke emotions that result in smiles, and these stimuli play a key role in smile detection. How people distinguish between forms of smiles depends on their perceptions, which are shaped by the exposures that influence them. Participants categorically identified smiles as spontaneous or evoked. In EMG smile detection, participants observed images and recorded their judgments, clearly indicating the influences that led them to categorize a smile as spontaneous or evoked. Because perceptions are complex and shaped by previous experience, individual differences produce different results for different images. This contrasts with the automated EEG system, which applies a standardized ranking to the observations and therefore produces standardized amplitude recordings across timing variations (Witvliet and Vrana 3-25). The research aimed not only to detect evoked and spontaneous smiles, but also to demonstrate that the automated EEG smile detector can detect smiling behavior that visual observation may miss, and to differentiate the neural influences behind different kinds of smiles. It is therefore a promising approach to answering questions about the neurally controlled behavioral influences on smiles, and it opens a field for future research.

Conclusion & Further Research

The field of EEG-based automated smile detection is advancing to the point where it can be applied to questions in the behavioral sciences. By describing pioneering efforts to differentiate fake from real smiles, this study lays a foundation for fully automated smile detection. Naïve human observers distinguish fake from spontaneous smiles with low accuracy, whereas the automated system achieved relatively higher accuracy in the experiments (Ambadar, Cohn, and Reed 17-34). Through machine analysis, samples of spontaneous smiles from the study were analyzed to better understand the occurrence of real and fake smiles. The cues involved, and the behavioral effects of age-related differences in discriminating positive expressions, need fuller study. It is also important to study how adults differentiate between spontaneous and false smiles and whether this ability contributes positively to their social interactions.

This paper focused on smiles in poses approximating frontal views. Automated EEG smile detection is an advancing approach that many researchers use to understand facial expressions through coding. The experience of developing a smile detector indicates that future research needs to capture variables reflecting personal characteristics within practical paradigms that advance the understanding of smiles and other facial expressions.

References

Ahn, Sun Joo, Jeremy Bailenson, Jesse Fox, and Maria Jabon. “Using Automated Facial Expression Analysis for Emotion and Behavior Prediction.” Paper presented at the annual meeting of the NCA 95th Annual Convention, Chicago Hilton & Towers, Chicago, IL, 2009. Web.

Ambadar, Zara, Jeffrey Cohn, and Lawrence Reed. “All Smiles Are Not Created Equal: Morphology and Timing of Smiles Perceived As Amused, Polite, and Embarrassed/Nervous.” Journal of Nonverbal Behavior 33.1 (2009): 17-34. Academic Search Complete. Web.

Anderson, Nicholas R. and Kimberly J. Wisneski. “Automated Analysis and Trending of the Raw EEG Signal.” American Journal of Electroneurodiagnostic Technology 48.3 (2008): 166-191. CINAHL Plus with Full Text. Web.

Bae, Myungsoo. “Automated Three-Dimensional Face Authentication & Recognition.” Arizona State University, 2008. ProQuest Dissertations & Theses (PQDT). Web.

Chartrand, Josée, and Pierre Gosselin. “Judgement of Authenticity of Smiles and Detection of Facial Indexes.” Canadian Journal of Experimental Psychology = Revue Canadienne De Psychologie Expérimentale 59.3 (2005): 179-189. MEDLINE with Full Text. Web.

Hao, Noriko, Yukihiko Fujita, Ryutaro Kohira, Tatsuo Fuchigami, Osami Okubo, and Kensuke Harada. “Abstract.” Selected Papers: Clinical Research. Epilepsia 46.1 (2005): 68-73. Academic Search Complete. Web.

“Lay Abstracts.” Clinical Science. Epilepsia 47.2 (2006): 228-232. Academic Search Complete. Web.

Messinger, Daniel S., Mohammad H. Mahoor, Sy-Miin Chow, and Jeffrey F. Cohn. “Automated Measurement of Facial Expression in Infant-Mother Interaction: A Pilot Study.” Infancy 14.3 (2009): 285-305. Academic Search Complete. Web.

Messinger, Daniel S., Tricia D. Cassel, Susan I. Acosta, Zara Ambadar, and Jeffrey F. Cohn. “Infant Smiling Dynamics and Perceived Positive Emotion.” Journal of Nonverbal Behavior 32.3 (2008): 133-155. Family Studies Abstracts. Web.

Murphy, Nora A., Jonathan M. Lehrfeld, and Derek M. Isaacowitz. “Recognition of Posed and Spontaneous Dynamic Smiles in Young and Older Adults.” Psychology and Aging 25.4 (2010): 811-821. MEDLINE with Full Text. Web.

Niedenthal, Paula M., Markus Brauer, Jamin B. Halberstadt, and Åse H. Innes-Ker. “When Did Her Smile Drop? Facial Mimicry and the Influences of Emotional State on the Detection of Change in Emotional Expression.” Cognition & Emotion 15.6 (2001): 853-864. Business Source Complete. Web.

Okubo, Matia, Akihiro Kobayashi and Kenta Ishikawa. “A Fake Smile Thwarts Cheater Detection.” Journal of Nonverbal Behavior 36.3 (2012): 217-225. Academic Search Complete. Web.

Shoeb, Ali H., Trudy Pang, John V. Guttag and Steven Schachter. “Non-Invasive Computerized System for Automatically Initiating Vagus Nerve Stimulation Following Patient-Specific Detection of Seizures or Epileptiform Discharges.” International Journal of Neural Systems 19.3 (2009): 157-172. Academic Search Complete. Web.

Song, Yuedong. “A Review of Developments of EEG-Based Automatic Medical Support Systems for Epilepsy Diagnosis and Seizure Detection.” Journal of Biomedical Science & Engineering 4.12 (2011): 788-796. Academic Search Complete. Web.

Witvliet, Charlotte V. O., and Scott R. Vrana. “Play It Again Sam: Repeated Exposure to Emotionally Evocative Music Polarises Liking and Smiling Responses, and Influences Other Affective Reports, Facial EMG, and Heart Rate.” Cognition & Emotion 21.1 (2007): 3-25. Academic Search Complete. Web.